Key Takeaways

- Major investment in AI power: OpenAI and NVIDIA are jointly developing data centers with a combined power capacity of 10 gigawatts.

- Strategic global rollout: The infrastructure will span North America, Europe, and Asia to support worldwide AI deployment.

- Focus on next-generation AI: The added computing power is intended to accelerate OpenAI’s development of more advanced and accessible AI models.

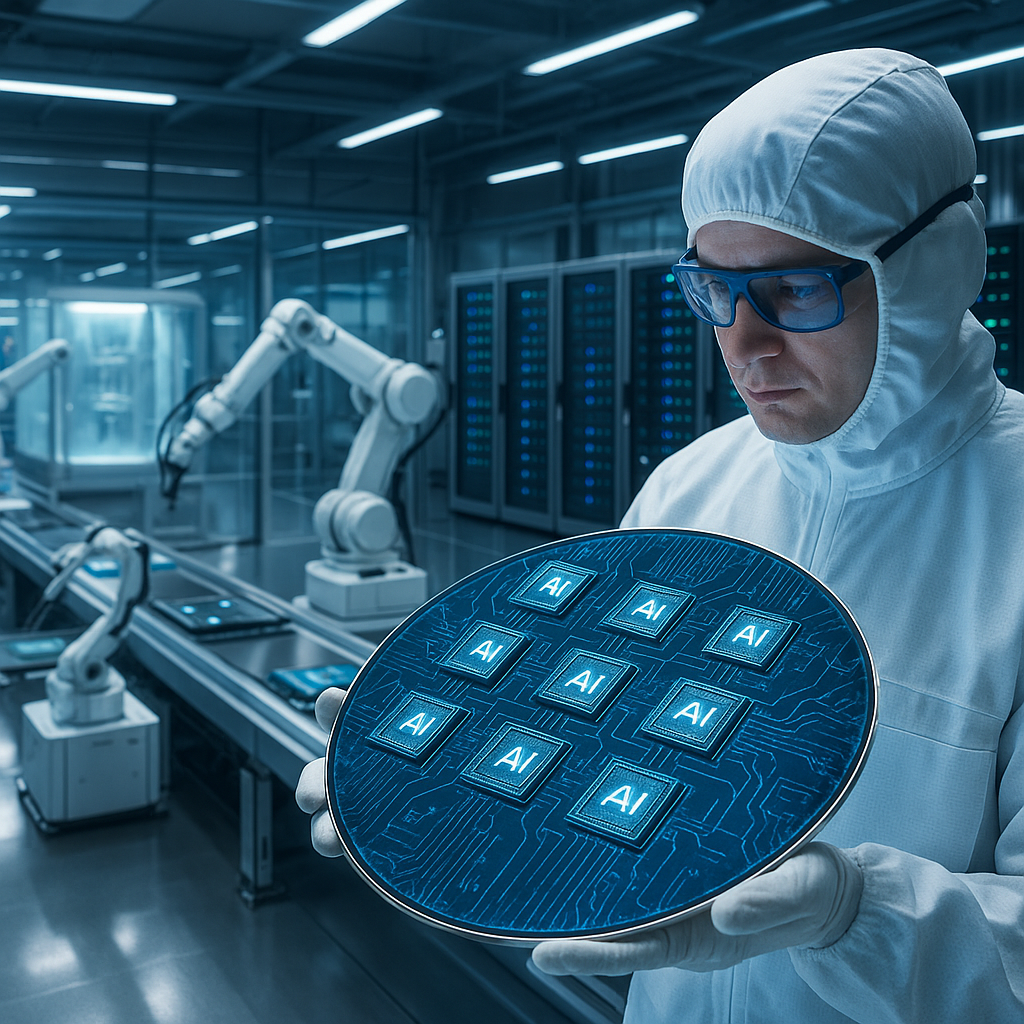

- NVIDIA hardware as the backbone: NVIDIA’s latest GPUs and chipsets will form the technological foundation of the new data centers.

- Industry implications: The partnership signals intensified competition in AI infrastructure, affecting cloud providers and enterprise users.

- Expansion timeline: Initial data centers are scheduled to go live in 2025, with additional phases through 2027.

Introduction

OpenAI and NVIDIA today announced a major partnership to build global AI infrastructure with 10 gigawatts of power capacity across North America, Europe, and Asia by 2027. The first data centers are set to go live in 2025. By combining advanced NVIDIA hardware with OpenAI’s next-generation models, the alliance aims to accelerate AI innovation and shape the technology landscape for users and businesses worldwide.

Background

OpenAI and NVIDIA have agreed to collaborate on building data centers with a combined power capacity of 10 gigawatts, dedicated to AI development and deployment. This infrastructure investment ranks among the largest commitments to AI computing in the industry’s history.

Development of the initial facilities will begin in early 2025, with phased deployment extending through 2027. The data centers will be strategically positioned across North America, Europe, and Asia to optimize global AI services and minimize latency.

NVIDIA CEO Jensen Huang characterized the partnership as “a watershed moment for AI infrastructure.” OpenAI CEO Sam Altman emphasized that the collaboration “provides the foundation necessary for the next generation of AI capabilities.”

Technology Overview

The new data centers will feature NVIDIA’s latest H200 Tensor Core GPUs and forthcoming AI accelerators, connected through NVIDIA’s NVLink high-speed interconnect technology. These specialized chips are designed to handle the demanding computations required to train and run increasingly complex AI models.

Each facility will utilize NVIDIA’s liquid cooling technology, enabling higher computational density and reduced environmental impact. The system architecture is being co-designed by both companies to match OpenAI’s specific requirements.

NVIDIA’s Senior VP of Data Center Products stated that these systems are engineered to deliver unprecedented performance for training foundation models. The infrastructure will support models larger and more capable than GPT-4, with a strong focus on multimodal capabilities.

Financial and Business Implications

This partnership represents a multi-billion-dollar investment over the development timeline, though neither company has disclosed exact figures. Analysts estimate the total cost could exceed $30 billion once hardware, facilities, and operating expenses are included.

For OpenAI, securing this computing power is critical to maintaining a competitive edge in the fast-moving AI sector. The company has previously faced compute constraints that slowed the pace of its research and development.

For NVIDIA, the deal reinforces its dominant position in AI hardware by extending its business from chip sales into infrastructure partnerships. Technology analyst Sarah Chen at Morgan Stanley noted that the deal transforms NVIDIA from a vendor into a strategic partner in AI development.

With NVIDIA’s latest GPUs and chipsets at the core of these facilities, the deal also underscores how central advanced processors have become to AI progress.

Environmental Considerations

The upcoming data centers will incorporate advanced power management systems and will be located in regions with access to renewable energy. Both companies have committed to carbon-neutral operations, with planned measures to offset any residual emissions.

Energy efficiency was prioritized during the design phase, and the new systems are claimed to use 30% less power per computation than current data centers. Facilities will also use heat recapture systems to redirect waste heat to nearby buildings or other practical uses.

OpenAI’s Chief Sustainability Officer stated that environmental impacts were considered from the beginning of planning. Environmental assessments will be conducted at each proposed site before construction.

Industry Impact and Competition

The partnership has prompted industry-wide reassessment of AI infrastructure strategies. Competitors such as Google DeepMind and Anthropic are reportedly exploring similar arrangements with AMD and other chip makers.

Major cloud service providers, including Amazon Web Services and Microsoft Azure, may face increased competition for high-performance AI workloads as these new dedicated facilities become operational. Several have already announced plans to accelerate their own infrastructure expansions.

The scale of the collaboration raises questions about access and market concentration. Smaller AI laboratories and startups have expressed concerns about being priced out of advanced development as computing requirements escalate.

AI adoption at this scale also raises the stakes for cloud and local storage strategies, as AI systems process ever-growing volumes of data.

What Happens Next

Both companies will begin site selection and permitting in the coming months. Ceremonial ground-breaking for the first facilities is expected by late 2024. Prototype system testing is already underway on a smaller scale within existing infrastructure.

OpenAI plans to use the new computing resources to train sophisticated multimodal AI systems that handle text, images, audio, and video together. The company has indicated that academic partners will be offered research access, although details are still being finalized.

NVIDIA will develop specialized software tools and optimizations for these data centers over the next 18 months. An NVIDIA technical director involved in the project explained that the goal is to create a fully integrated ecosystem tailored for AI.

As industries adopt AI at scale, understanding how AI assistants will integrate into daily life becomes crucial for both enterprises and individuals.

Conclusion

The alliance between OpenAI and NVIDIA represents a significant leap in the quest for AI computing power. It positions both companies to raise performance and efficiency benchmarks across the industry. As global rivals adapt their strategies, outcomes from this partnership could reshape access and influence in advanced AI research.

What to watch: site selection decisions, permitting progress, and key construction milestones anticipated before the end of 2024.

To further explore AI’s trajectory and its societal impact, see the analysis on AI regulation and ethics shaping the next decade as technology continues to evolve.

Leave a Reply